As artificial intelligence becomes increasingly prevalent, researchers at the University of California are working to address bias in algorithms and mitigate its impact on society. Zubair Shafiq, Associate Professor of Computer Science at UC Davis, is at the forefront of this effort. Prof. Shafiq’s recent paper, “Auditing YouTube’s recommendation system for ideologically congenial, extreme, and problematic recommendations,” investigates how AI systems can unintentionally reinforce biases and promote problematic content, particularly on social media platforms.

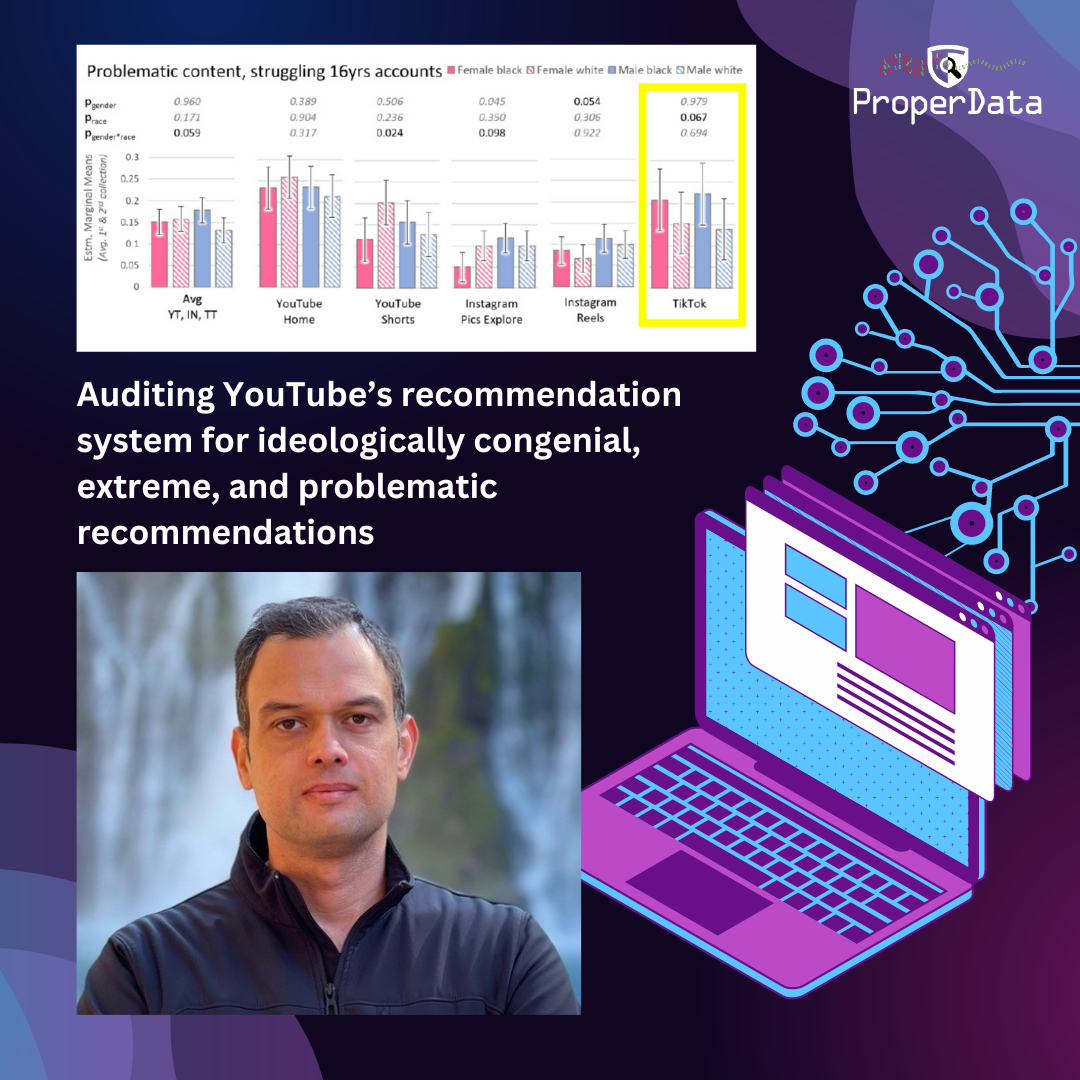

The research revealed troubling patterns: YouTube recommends problematic videos more frequently to users at the extremes of the political spectrum, and the likelihood increases the longer a user engages with the platform. Prof. Shafiq’s team also found that TikTok displays different content to users based on perceived racial identity, perpetuating biases.

Prof. Shafiq’s proposed solution to the bias problem involves both corporate accountability and government regulation. He advocates for tech companies like YouTube and Meta to consider research findings such as his lab’s when designing and modifying their algorithms, and believes that by making the necessary adjustments, these platforms can reduce bias and promote healthier online environments. Prof. Shafiq also engages with public officials and policymakers, advocating for regulations, accountability, and proactive measures that protect users from the potential harms of biased algorithms.

Read UC Davis’ Engineering Communication’s coverage here.

See all ProperData papers on our publications page.